Introduction

Latency is the time taken to finish a task. Throughput is the number of tasks finished in a given time.

For example, if you take 1 minute to read a page, your latency is 1 minute per page and your throughput is 60 PPH (pages per hour).
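The arithmetic behind the reading example can be sketched as below. The function name `throughput_per_hour` and the `workers` parameter are illustrative assumptions, not part of the original notes. The parallel case is included only to show that throughput is the inverse of latency when tasks run one at a time, but not otherwise.

```python
def throughput_per_hour(latency_seconds: float, workers: int = 1) -> float:
    """Tasks finished per hour, assuming every task takes latency_seconds
    and `workers` tasks are processed in parallel (hypothetical model)."""
    if latency_seconds <= 0:
        raise ValueError("latency_seconds must be positive")
    return workers * 3600 / latency_seconds


# One reader, 1 minute (60 seconds) per page.
print(throughput_per_hour(60))     # 60.0 pages per hour

# Two readers working in parallel: latency per page is unchanged,
# but throughput doubles.
print(throughput_per_hour(60, 2))  # 120.0 pages per hour
```

Under this simple model, adding workers raises throughput without lowering the latency of any single task, which is why the two metrics are tracked separately.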

Aspects of Caching

Caching can be done at many layers: on the client side, on the server side, or in a dedicated (separate) cache layer.

Caching options are available at the following layers:

- Database

- Web server

- Application server

- Browser (or Client Side)

- Distributed Caching

- CDN (Content Delivery Network)

We need to consider several aspects when choosing the right caching mechanism: how much data to load, which data to cache, how often the data is refreshed, and how unused data expires (eviction). The cache should also avoid the cold start problem (an empty cache that sends every request to the backing store) and, if it is distributed, the celebrity problem (a few very popular keys overloading a single node).
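As one illustration of the refresh and expiry aspects, here is a minimal sketch of time-based expiry (TTL). The class name `TTLCache`, its methods, and the injectable `clock` parameter are assumptions made for this example. A production cache would also need size limits and thread safety.

```python
import time
from typing import Any, Callable, Dict, Hashable, Optional, Tuple


class TTLCache:
    """Hypothetical cache whose entries expire ttl_seconds after being stored."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[Hashable, Tuple[float, Any]] = {}

    def put(self, key: Hashable, value: Any) -> None:
        # Record the value together with the time at which it expires.
        self._store[key] = (self._clock() + self._ttl, value)

    def get(self, key: Hashable) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None  # never cached
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Stale entry: drop it so the caller reloads fresh data.
            del self._store[key]
            return None
        return value
```

A shorter TTL keeps data fresher at the cost of more misses. A longer TTL does the opposite, so the value is usually chosen from how often the underlying data changes.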

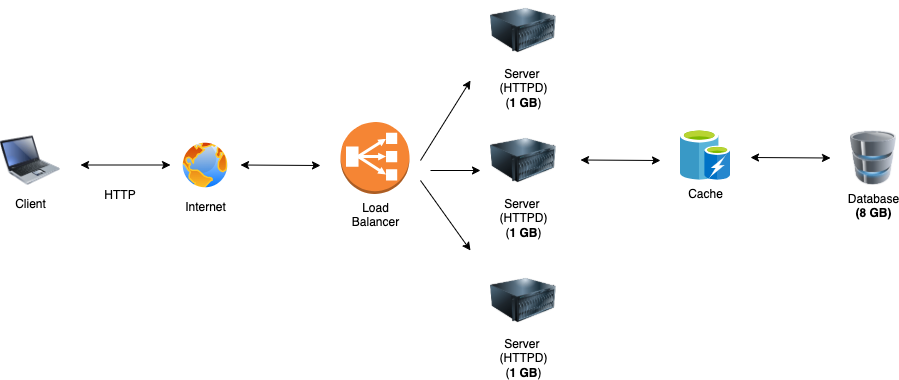

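The list below covers two related topics. The first four entries are strategies for updating (refreshing) the data stored in a cache. The last three (LRU, LFU, FIFO) are eviction policies that decide which entry to remove when the cache is full. The image above shows where the cache is placed, and the arrows indicate the direction of data flow.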

- Cache Aside

- Write Through

- Write Behind

- Refresh Ahead

- LRU (Least Recently Used)

- LFU (Least Frequently Used)

- FIFO (First In First Out)
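To make two of the entries above concrete, the following minimal sketch combines a cache-aside read path with LRU eviction. The names `LRUCache`, `read_cache_aside`, and `load_from_source` are hypothetical, and a plain dictionary stands in for the database. The other strategies and policies are not shown.

```python
from collections import OrderedDict
from typing import Any, Callable, Hashable


class LRUCache:
    """Hypothetical fixed-size cache that evicts the least recently used key."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items: "OrderedDict[Hashable, Any]" = OrderedDict()

    def __contains__(self, key: Hashable) -> bool:
        return key in self._items

    def get(self, key: Hashable) -> Any:
        # Reading a key marks it as the most recently used.
        self._items.move_to_end(key)
        return self._items[key]

    def put(self, key: Hashable, value: Any) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self._capacity:
            # The first item in the ordering is the least recently used.
            self._items.popitem(last=False)


def read_cache_aside(cache: LRUCache, key: Hashable,
                     load_from_source: Callable[[Hashable], Any]) -> Any:
    """Cache-aside read: the application checks the cache first and,
    on a miss, loads from the source and populates the cache itself."""
    if key in cache:
        return cache.get(key)
    value = load_from_source(key)
    cache.put(key, value)
    return value


# Usage: a dict plays the role of the database (assumption for the demo).
database = {"a": 1, "b": 2, "c": 3}
loads = []  # records every key that had to be fetched from the "database"


def load(key):
    loads.append(key)
    return database[key]


cache = LRUCache(capacity=2)
read_cache_aside(cache, "a", load)  # miss, loaded from the database
read_cache_aside(cache, "b", load)  # miss
read_cache_aside(cache, "a", load)  # hit, "a" becomes most recently used
read_cache_aside(cache, "c", load)  # miss, evicts "b" (least recently used)
print(loads)         # ['a', 'b', 'c']
print("b" in cache)  # False
```

In cache-aside the application owns both the lookup and the population step. With write-through or write-behind, the cache layer itself would be responsible for propagating writes to the store.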