Introduction

Resilience can be defined as the ability of a system to recover from failures, disruptions, or any other event that impairs its proper functioning.

In the real world, any system is likely to fail once in a while for various known or unknown reasons. We need to consider what we can do to help the system get through such situations, how we can prevent harm to the system, and how we can get back to business quickly.

Approach

Resilience can be achieved using the different approaches listed below:

Fault Tolerance

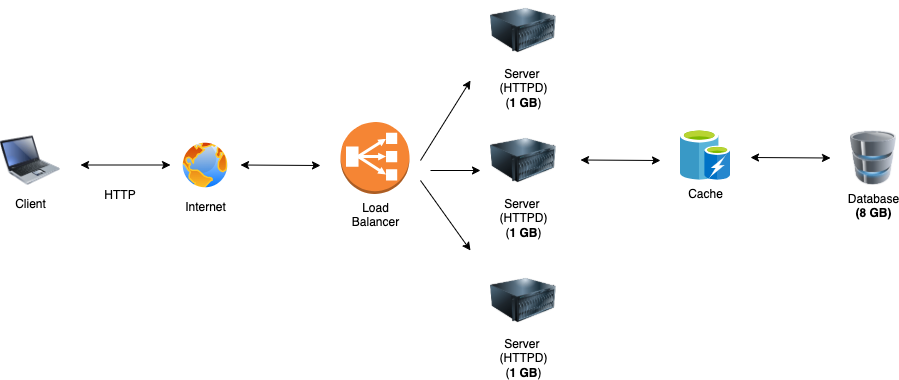

The system continues to work, fully or partially, even if a software or hardware component fails.

e.g. With load balancing, if one server fails, another server is created or the load is distributed among the remaining instances.
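The load-balancing example can be sketched as a round-robin balancer that skips a server whose request fails and sends the work to the remaining servers. This is a minimal in-process sketch under stated assumptions: `Server`, `LoadBalancer` and the `healthy` flag are hypothetical stand-ins. A real deployment would use a dedicated load balancer with health checks rather than application code.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    healthy: bool = True  # set to False to simulate a failure

    def handle(self, request: str) -> str:
        # Hypothetical handler: a failed server refuses the connection.
        if not self.healthy:
            raise ConnectionError(f"{self.name} is down")
        return f"{self.name} handled {request}"


@dataclass
class LoadBalancer:
    servers: list[Server]
    _next: int = field(default=0, init=False)  # round-robin position

    def route(self, request: str) -> str:
        # Try each server at most once, starting at the round-robin position,
        # so a failed server is skipped and its load goes to the others.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            try:
                return server.handle(request)
            except ConnectionError:
                continue
        raise RuntimeError("no healthy servers available")


if __name__ == "__main__":
    servers = [Server("a"), Server("b"), Server("c")]
    lb = LoadBalancer(servers)
    servers[1].healthy = False  # simulate a failure of server "b"
    print([lb.route(f"req{i}") for i in range(4)])
    # → ['a handled req0', 'c handled req1', 'a handled req2', 'c handled req3']
```

Requests that would have gone to the failed server "b" are served by "c". The system keeps working at reduced capacity instead of failing outright.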

Redundancy

Redundancy (duplication of components or data) provides a backup and also helps the system recover from failures quickly.

e.g. Create another database instance using replication and switch to it if the original instance fails.
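The replication example can be sketched as a primary and a replica. Every write is copied to the replica, and reads and writes fail over to the replica when the primary becomes unavailable. This sketch makes two simplifying assumptions. First, `Database` and `ReplicatedDatabase` are hypothetical in-memory stand-ins. Second, replication is synchronous. Real databases replicate over the network and often asynchronously, so a replica may lag slightly behind the primary.

```python
from typing import Optional


class Database:
    """Hypothetical in-memory stand-in for a database instance."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.data: dict[str, str] = {}
        self.available = True  # set to False to simulate a failure

    def _check(self) -> None:
        if not self.available:
            raise ConnectionError(f"{self.name} is unavailable")

    def write(self, key: str, value: str) -> None:
        self._check()
        self.data[key] = value

    def read(self, key: str) -> Optional[str]:
        self._check()
        return self.data.get(key)


class ReplicatedDatabase:
    """Sends traffic to the primary and fails over to the replica."""

    def __init__(self, primary: Database, replica: Database) -> None:
        self.primary = primary
        self.replica = replica

    def _failover(self) -> None:
        # Promote the replica; the failed node becomes the (unavailable) replica.
        self.primary, self.replica = self.replica, self.primary

    def write(self, key: str, value: str) -> None:
        try:
            self.primary.write(key, value)
        except ConnectionError:
            self._failover()
            self.primary.write(key, value)
        # Synchronous replication (assumption): copy the write if the replica is up.
        if self.replica.available:
            self.replica.write(key, value)

    def read(self, key: str) -> Optional[str]:
        try:
            return self.primary.read(key)
        except ConnectionError:
            self._failover()
            return self.primary.read(key)
```

Because the replica already holds a copy of the data, promoting it lets the system keep serving requests immediately instead of waiting for the original instance to be repaired.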

Monitoring

Continuous tracking and monitoring help in the early detection of problems.

e.g. Continuously track the system's health and take automatic action or raise an alert if the given health criteria are not met.
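A health check against given criteria can be sketched as follows. Each metrics sample is compared with thresholds. After several failed checks in a row, one alert is raised and one automatic action is triggered. The metric names, threshold values, the `consecutive=3` rule and the `alert`/`remediate` callbacks are all hypothetical choices for illustration. In practice the samples would come from a monitoring system polling the service periodically.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class HealthCriteria:
    # Hypothetical thresholds; suitable values depend on the system.
    max_error_rate: float = 0.05   # fraction of failed requests
    max_latency_ms: float = 500.0  # e.g. 95th-percentile response time


def evaluate(metrics: dict[str, float], criteria: HealthCriteria) -> list[str]:
    """Return the violated criteria; an empty list means the check passed."""
    problems = []
    if metrics["error_rate"] > criteria.max_error_rate:
        problems.append(
            f"error rate {metrics['error_rate']:.1%} > {criteria.max_error_rate:.1%}"
        )
    if metrics["latency_ms"] > criteria.max_latency_ms:
        problems.append(
            f"latency {metrics['latency_ms']:.0f} ms > {criteria.max_latency_ms:.0f} ms"
        )
    return problems


def monitor(
    samples: Iterable[dict[str, float]],
    criteria: HealthCriteria,
    alert: Callable[[str], None],
    remediate: Callable[[], None],
    consecutive: int = 3,
) -> None:
    """Check each sample; after `consecutive` failed checks in a row, raise one
    alert and trigger one automatic action for that run of failures."""
    failures = 0
    for metrics in samples:
        problems = evaluate(metrics, criteria)
        failures = failures + 1 if problems else 0  # a passing check resets the count
        if failures == consecutive:
            alert("; ".join(problems))
            remediate()
```

Requiring several consecutive failures is one common way to avoid reacting to a single noisy sample. The trade-off is that real problems are detected slightly later.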

Disaster Recovery

Restore the system after a disaster.

e.g. Take regular backups to avoid data loss or keep it to a minimum.
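Regular backups and restores can be sketched with a store that keeps point-in-time snapshots and restores the latest one after a disaster. `BackupStore`, its `retention` limit and the integer timestamps are hypothetical. A real setup would write backups to separate, durable storage, ideally in another location.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class BackupStore:
    """Hypothetical backup store that keeps the last `retention` snapshots."""

    retention: int = 7
    snapshots: list[tuple[int, dict]] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.retention < 1:
            raise ValueError("retention must be at least 1")

    def backup(self, timestamp: int, data: dict) -> None:
        # Deep-copy so later changes to the live data do not alter the snapshot.
        self.snapshots.append((timestamp, copy.deepcopy(data)))
        # Drop the oldest snapshots beyond the retention limit.
        del self.snapshots[: -self.retention]

    def restore_latest(self) -> tuple[int, dict]:
        if not self.snapshots:
            raise LookupError("no backup available")
        timestamp, data = self.snapshots[-1]
        return timestamp, copy.deepcopy(data)
```

Anything written after the most recent snapshot is lost when the system is restored. The worst-case data loss is therefore roughly one backup interval, and more frequent backups keep that loss to a minimum.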

Self Healing

The system can automatically detect and correct issues by itself, without manual intervention.

e.g. With AWS Auto Scaling, if an instance fails, a new instance is automatically created and the traffic of the unhealthy instance is moved to the new one.
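The self-healing idea can be sketched as a reconciliation loop that compares the actual state with the desired state. On each pass it removes unhealthy instances and launches replacements until the desired number of healthy instances is running again. This is a simplified illustration of the idea only, not the AWS API. `Instance`, `InstanceGroup`, `desired` and the generated IDs are hypothetical. Moving traffic to the new instance (normally a load balancer's job) is not modelled here.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Instance:
    instance_id: str
    healthy: bool = True  # set to False to simulate a failed health check


class InstanceGroup:
    """Hypothetical group that keeps `desired` healthy instances running."""

    def __init__(self, desired: int) -> None:
        self.desired = desired
        self._counter = itertools.count(1)
        self.instances: list[Instance] = []
        self.reconcile()  # launch the initial instances

    def _launch(self) -> Instance:
        return Instance(instance_id=f"instance-{next(self._counter)}")

    def reconcile(self) -> list[str]:
        """Run one pass of the self-healing loop; return the IDs removed."""
        removed = [i.instance_id for i in self.instances if not i.healthy]
        # Terminate unhealthy instances.
        self.instances = [i for i in self.instances if i.healthy]
        # Launch replacements until the desired count is met again.
        while len(self.instances) < self.desired:
            self.instances.append(self._launch())
        return removed
```

Running `reconcile()` periodically, for example after each health check, means that any failed instance is replaced without manual intervention.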